Chapter 5. Holonomic-drive-based robot for behavioral research

Abstract: This chapter introduces the concept of MogiRobi, an autonomous robot based on an ethological model. Technically, MogiRobi is a mobile robot with a holonomic drive. Owing to a design inspired by ethology and cognitive infocommunication, it can display animal-like behaviors, high-level interactions, and emotions. To evaluate high-level interactions with the environment, the robot operates in an iSPACE (intelligent space), an environment in which an external sensor network and distributed computing supply information to the robot’s control algorithm. This development shows a viable trend toward a novel methodology for designing mobile service robots on ethological principles.

5.1. Introduction

Much research in the last decade has focused on human-robot interaction (HRI), because service robots work in the same environment as people. This research investigates HRI from an ethological point of view, based on the 20,000-year-old human-dog relationship [3]. Ethologists have been observing dog behavior from the attachment point of view for the last fifteen years. Based on these observations, a new type of artificial intelligence can be created that is able to attach to a specified owner.

5.2. Concept

5.2.1. Etho-motor

Ethological researchers have investigated the attachment behavior of dogs over the last 50 years. Today, the objective measure of attachment behavior is the Strange Situation Test (SST). Observations of dog behavior during several SSTs form the basic input of an abstract emotional fuzzy model called the etho-motor. The etho-motor provides the high-level control of MogiRobi.
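To make the idea of a fuzzy emotional model more concrete, the following is a minimal sketch of a zero-order Sugeno-style fuzzy rule base. It is not the etho-motor itself: the input variable (distance to the owner), the membership breakpoints, the rule outputs and all function names are hypothetical, chosen only to illustrate how a fuzzy rule base could map an observed situation to a graded emotional output.

```python
def falling(x, a, b):
    """Membership that is 1 below a and falls linearly to 0 at b."""
    if x <= a:
        return 1.0
    if x >= b:
        return 0.0
    return (b - x) / (b - a)


def rising(x, a, b):
    """Membership that is 0 below a and rises linearly to 1 at b."""
    return 1.0 - falling(x, a, b)


def triangle(x, a, b, c):
    """Triangular membership with feet at a and c and peak at b."""
    return min(rising(x, a, b), falling(x, b, c))


def happiness(owner_distance_m):
    """Map owner distance (metres) to a happiness level in [0, 1].

    Hypothetical rules:
      owner NEAR   -> happy   (1.0)
      owner MEDIUM -> neutral (0.5)
      owner FAR    -> sad     (0.0)
    """
    d = owner_distance_m
    rules = [
        (falling(d, 0.5, 2.0), 1.0),        # NEAR
        (triangle(d, 0.5, 2.0, 4.0), 0.5),  # MEDIUM
        (rising(d, 2.0, 4.0), 0.0),         # FAR
    ]
    total = sum(weight for weight, _ in rules)
    # Weighted average of the rule outputs (Sugeno defuzzification).
    return sum(weight * out for weight, out in rules) / total
```

In this sketch the three membership functions always sum to one, so the output changes smoothly from happy to sad as the assumed owner distance grows. A model derived from SST observations would use more inputs (for example the presence of the stranger) and rules fitted to the recorded dog behavior.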

5.2.2. iSPACE

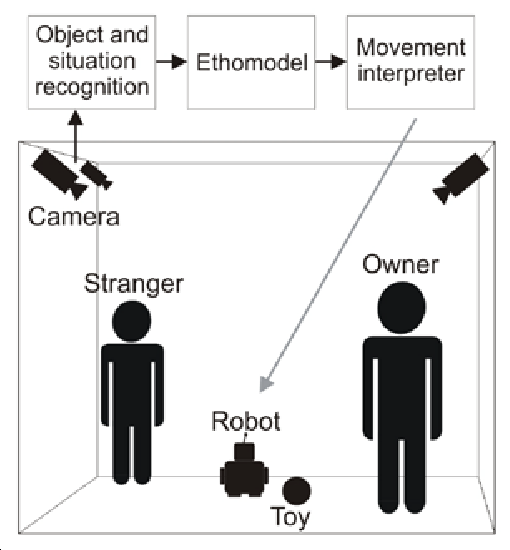

The input information for the control algorithm is provided by iSPACE. iSPACE (Intelligent Space) [2] is an intelligent environment that provides both informational and physical support to humans and robots in order to enhance their performance. This is achieved by a sensor network and distributed computing, which preprocess the raw sensor signals. During the behavioral experiments with the robot, the owner, a stranger and a toy are present in the iSPACE (see Fig. 5-2). The robot’s operation depends on the behavior of the owner and the stranger.

5.2.3. Behavior

Ethologically inspired research on the human-dog relationship and interaction supposes that dogs have evolved behavioral skills in the human environment. This social evolution increased their chances of survival in the anthropogenic environment [5]. Dogs use visual cues (e.g. tail, head and ear movement) and acoustic cues (e.g. barking, growling and squeaking) to express their emotions, and they combine these methods to communicate with humans. Humans are able to recognize the basic emotions of dogs without much specific experience [6]. From an engineering point of view, we do not provide an emotional model for the robot; we provide a device on which such a model can be implemented. The robot’s expression of emotional behavior when it is sad or happy can be seen in Fig. 5-3 and Fig. 5-4. We build on the analogy between the dog and the robot and design human-robot interaction by analogy with human-dog interaction. Humans are able to recognize emotions in the robot from the main parameters of dog attitudes.

5.2.4. Drive system of the robot

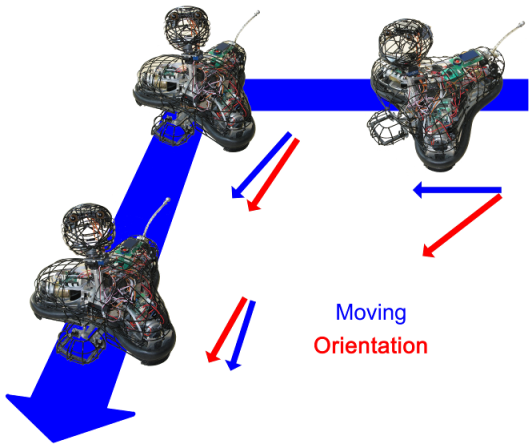

In industrial environments, non-holonomic robots are mostly adequate for the tasks at hand. For social and service robots, however, the drive concept needs further investigation. The goal is to create a robot that people accept as easily as they accept a dog. In fact, dogs and most other animals move holonomically. Holonomic robots and dogs share an additional property: while reaching a target on a non-straight trajectory, the moving direction and the looking direction can differ (see Fig. 5-5). In the case mentioned above, the moving and looking directions always differ. It is very strange behavior if a robot with a non-holonomic drive looks away from its target while approaching it.

Based on this, we assume that a holonomic drive can be better for service robots, because it lets the robot express emotional behavior through both its movement and its gaze while reaching a target. This holonomic property is hard to achieve with a legged robot because of the high power consumption and the extensive engineering work required. The use of holonomic wheels (omni-wheels) is therefore the compromise solution for MogiRobi.
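The difference between the moving and looking directions discussed above can be quantified with a few lines of code. The sketch below is only an illustration; the function name and the planar coordinate convention are assumptions, not part of the MogiRobi software.

```python
import math


def gaze_offset(position, velocity, target):
    """Signed angle (rad) from the direction of motion to the bearing of the target.

    position, velocity and target are (x, y) tuples in a common planar frame.
    """
    move_dir = math.atan2(velocity[1], velocity[0])
    look_dir = math.atan2(target[1] - position[1], target[0] - position[0])
    diff = look_dir - move_dir
    # Wrap the difference into (-pi, pi].
    return math.atan2(math.sin(diff), math.cos(diff))
```

For a robot at the origin that moves along the x axis while its target lies at (1, 1), the offset is 45 degrees. A holonomic base can keep its head pointed at the target under these conditions, whereas a drive whose heading is tied to the direction of motion would have to look away from the target by this angle.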

5.3. Technical design of MogiRobi

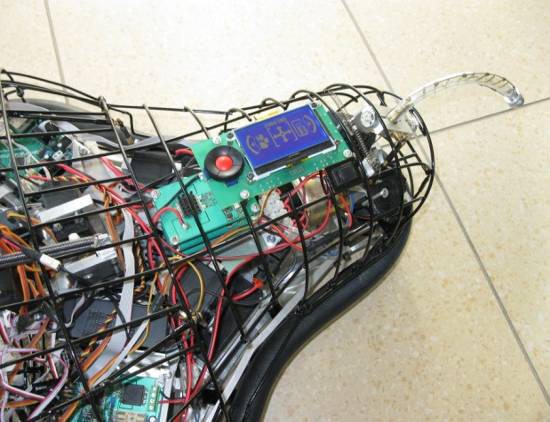

5.3.1. The base of the robot

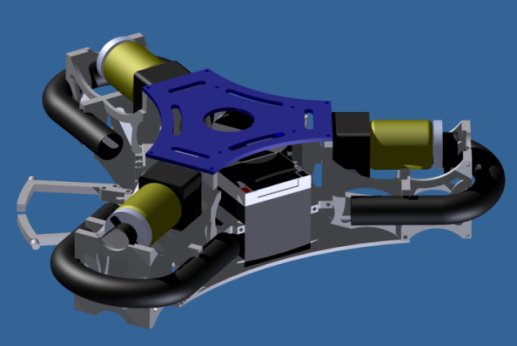

Instead of legs, the structure has a holonomic base, a new concept for mobile robot drive systems. Moving on legs would require much more power than rolling on wheels, and legged movement is more complicated and expensive: smooth motion on legs would require complex control algorithms and an expensive mechanical construction in more than one version. The most important factor in the design of the robot’s movement was the ability to move in all planar degrees of freedom. With an omnidirectional drive the robot can reach the reference position and orientation by moving in any direction at any time, like a dog, and not only forward and backward as with differential-drive or car-like systems.

Omni wheels are wheels with small discs (smaller wheels) around the circumference, mounted perpendicular to the rolling direction (Fig. 5-8). As a result of this mechanical construction, the wheel can roll with full force but can also slide laterally with great ease. The three wheels are driven by three DC motors through driving gears and belt transmissions. The robot can reach a top speed of 2 m/s (7.2 km/h) in any direction. As Fig. 5-6 and Fig. 5-7 show, the motor shafts are arranged around a circle and point toward its center, enclosing angles of 120° with each other. This configuration gives a 3 DoF holonomic system (x, y, rot z). Most land animals can move in 3 DoF, turning and moving in one direction at the same time [4]. Ordinary drive systems (steered wheels, differential drive) cannot do this.

The platform of MogiRobi can carry 20 kg at maximum speed. It has about 0.25 kW of DC motor power and a working time of 2 hours on one battery set under normal use. There are incremental encoders on each motor shaft. From the signals of the incremental encoders and the kinematic model of the robot, we can calculate the x, y, rot z coordinates along the path from point A to point B.
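The kinematic model of a three-wheel omni base with 120° spacing can be sketched as follows. This is a minimal illustration under stated assumptions, not the firmware of MogiRobi: the wheel angles (0°, 120°, 240°), the distance of the wheels from the center (0.2 m) and all function names are hypothetical, and each wheel is assumed to roll tangentially to the circle on which the shafts are arranged.

```python
import math

# Assumed geometry: wheel contact points at 0, 120 and 240 degrees around
# the body centre, each wheel rolling tangentially to that circle.
WHEEL_ANGLES = (0.0, 2.0 * math.pi / 3.0, 4.0 * math.pi / 3.0)
CENTER_TO_WHEEL = 0.2  # metres, hypothetical value


def inverse_kinematics(vx, vy, omega, r=CENTER_TO_WHEEL):
    """Body-frame velocity (m/s, m/s, rad/s) -> rim speed of each wheel (m/s)."""
    return [-math.sin(a) * vx + math.cos(a) * vy + r * omega
            for a in WHEEL_ANGLES]


def forward_kinematics(wheel_speeds, r=CENTER_TO_WHEEL):
    """Rim speeds of the three wheels -> body-frame velocity (vx, vy, omega)."""
    vx = (2.0 / 3.0) * sum(-math.sin(a) * w
                           for a, w in zip(WHEEL_ANGLES, wheel_speeds))
    vy = (2.0 / 3.0) * sum(math.cos(a) * w
                           for a, w in zip(WHEEL_ANGLES, wheel_speeds))
    omega = sum(wheel_speeds) / (3.0 * r)
    return vx, vy, omega


def integrate_odometry(pose, wheel_speeds, dt, r=CENTER_TO_WHEEL):
    """Advance the world-frame pose (x, y, phi) by one Euler step of length dt."""
    x, y, phi = pose
    vx, vy, omega = forward_kinematics(wheel_speeds, r)
    # Rotate the body-frame velocity into the world frame before integrating.
    x += (vx * math.cos(phi) - vy * math.sin(phi)) * dt
    y += (vx * math.sin(phi) + vy * math.cos(phi)) * dt
    phi += omega * dt
    return x, y, phi
```

In such a scheme the wheel rim speeds would be derived from the encoder counts, the gear ratio and the wheel radius, and `integrate_odometry` would be called once per control period. A command with non-zero `vx`, `vy` and `omega` at the same time corresponds to moving in one direction while turning.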
We can also plan the path between two relative reference points by interpolation. Animal-like movement (acceleration, speed, path) can be implemented by the control algorithm in the embedded system. The motherboard on the robot base can receive relative position, orientation, absolute speed and acceleration references for the movement, as well as other peripheral references, through a Bluetooth port. The peripheral electronics can be connected via the serial peripheral interface (SPI).
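One common way to interpolate between two reference poses with bounded acceleration is a trapezoidal speed profile. The sketch below illustrates that general technique; it is an assumption that a profile of this kind suits the robot, and the function names, the `ramp_fraction` parameter and its default value are hypothetical.

```python
import math


def trapezoid_fraction(t, total_time, ramp_fraction=0.25):
    """Fraction of the path covered at time t under a trapezoidal speed profile.

    ramp_fraction is the share of total_time spent accelerating (and, equally,
    decelerating); it must lie in the interval (0, 0.5].
    """
    if t <= 0.0:
        return 0.0
    if t >= total_time:
        return 1.0
    ta = ramp_fraction * total_time    # duration of each ramp
    v_peak = 1.0 / (total_time - ta)   # peak rate so that the area equals 1
    if t < ta:                         # acceleration phase
        return 0.5 * v_peak * t * t / ta
    if t <= total_time - ta:           # constant-speed phase
        return v_peak * (t - 0.5 * ta)
    td = total_time - t                # deceleration phase
    return 1.0 - 0.5 * v_peak * td * td / ta


def interpolate_pose(start, goal, s):
    """Interpolate (x, y, phi) between start and goal for s in [0, 1]."""
    x = start[0] + s * (goal[0] - start[0])
    y = start[1] + s * (goal[1] - start[1])
    # Turn through the shortest angular difference.
    dphi = math.atan2(math.sin(goal[2] - start[2]), math.cos(goal[2] - start[2]))
    return x, y, start[2] + s * dphi
```

Calling `interpolate_pose(start, goal, trapezoid_fraction(t, T))` at each control step yields a reference pose that starts and stops smoothly. Changing `ramp_fraction` trades a gentler start against a higher peak speed, which is one possible handle for shaping animal-like motion.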

5.3.2. Body

The robot has a wired body that two RC servos move up and down. With this part the robot can behave like a cat displaying an aggressive attitude.

5.3.3. Head

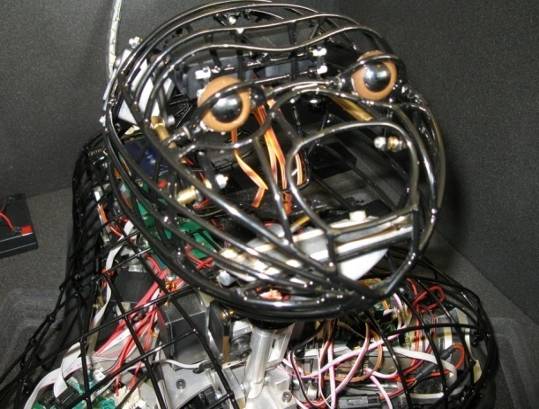

The head of the robot, shown in Fig. 5-11, has 4 DoF. The neck of the robot can move it along the arc of the body. The head is connected to the body by a ball joint with 3 DoF (rot x, y, z) and is moved around the ball joint by three RC servo drives (Fig. 5-9 and Fig. 5-10). Two of these are located on the neck for rot x and rot y, and one in the head for rot z (where z is the perpendicular axis). The head has two ears and eyelashes, which are also moved by compact servo drives.

5.3.4. Gripper

The gripper, shown in Fig. 5-12, has a very important role when we play with the robot: the robot can fetch a ball with it.

5.3.5. Tail

The tail has an important role in the visualization of emotions. The mechanical construction of the tail can be seen in Fig. 5-13 and Fig. 5-14. One servo drive moves the plastic tail through two wires. The tail is attached to the body with springs. The oscillating mechanical system for the wagging movement is excited by a DC servo drive. The damping of the system is negligible. By testing several springs and control algorithms, we tuned the system so that it acts like the tail movements of animals. These small factors are very important for the acceptance of the robot by children, the elderly, etc.
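Because the damping is negligible, the tail can be approximated as an undamped spring-inertia oscillator whose natural frequency is f = sqrt(k / J) / (2π), where k is the spring stiffness and J the moment of inertia of the tail. The sketch below evaluates this textbook relation; the numerical values are hypothetical and are not measurements of MogiRobi.

```python
import math


def natural_frequency_hz(stiffness_nm_per_rad, inertia_kg_m2):
    """Natural frequency of an undamped torsional spring-inertia system."""
    return math.sqrt(stiffness_nm_per_rad / inertia_kg_m2) / (2.0 * math.pi)


# Hypothetical values: a soft spring and a light plastic tail.
soft = natural_frequency_hz(0.04, 1e-4)
# Quadrupling the stiffness doubles the frequency.
stiff = natural_frequency_hz(0.16, 1e-4)
```

Under this approximation, swapping springs shifts the wagging frequency with the square root of the stiffness, and exciting the tail close to its natural frequency produces a large wag from small servo movements.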

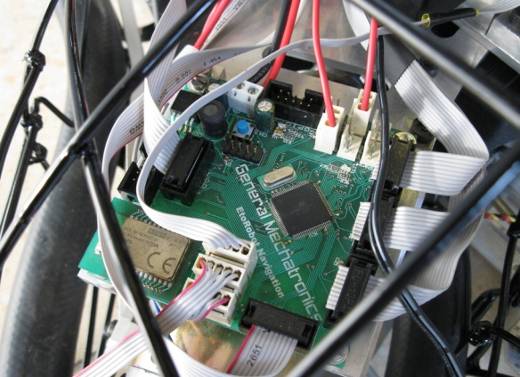

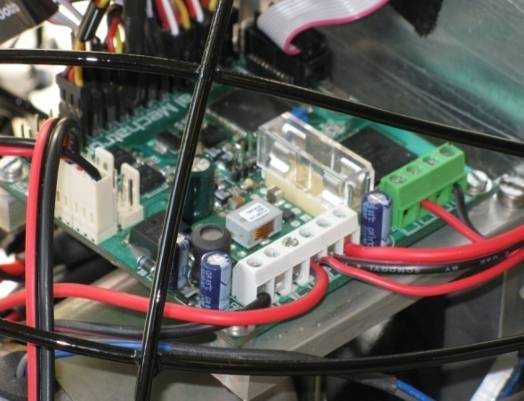

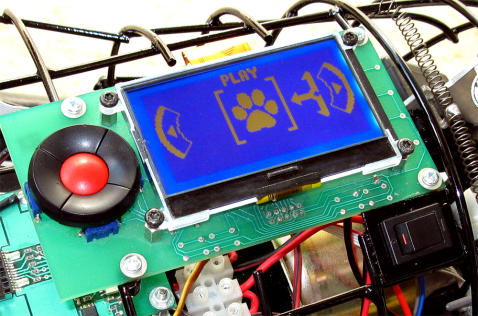

5.3.6. Control and power electronics

Each control board has one processor. The first is a simple 8-bit RISC Xmega processor from Atmel. It handles the communication with the server via Bluetooth, the motor control loop and the high-level position calculation. It also sends the reference angles of the servos to the servo board and handles 3 encoder channels for odometry. The servo control board can be seen on figure 17; it has 15 output channels for compact RC servos. With RC servos it is very important to use an isolated power supply. The servo control board provides PWM signals for the RC servo drives. The accelerations of the servo drives are strongly limited in order to eliminate transient disturbances during movement. All servo trajectories are calculated with a low, symmetrical acceleration profile, which gives the robot a smooth motion profile. The nominal power rating of the PWM amplifier board is 3 × 24 V / 10 A. We use special automotive-compatible ICs with current feedback, overcurrent sensing and temperature sensing. On the back of the robot there is an LCD with control buttons, see figure 18. With these we can choose between the control methods, run the self-test, or check information such as the battery state.
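A symmetrical acceleration profile and its conversion to RC servo pulse widths can be sketched as follows. The profile shown (constant acceleration over the first half of the move, equal deceleration over the second half) is one simple choice and is an assumption, as are the function names. The 1000 to 2000 µs pulse range over ±90° is a typical hobby-servo convention, not a documented parameter of the servo board described here.

```python
def symmetric_profile(tau):
    """Normalized position for normalized time tau in [0, 1].

    Constant acceleration up to tau = 0.5, then equal constant deceleration.
    """
    tau = min(max(tau, 0.0), 1.0)
    if tau < 0.5:
        return 2.0 * tau * tau
    return 1.0 - 2.0 * (1.0 - tau) ** 2


def angle_to_pulse_us(angle_deg, min_angle=-90.0, max_angle=90.0,
                      min_pulse_us=1000.0, max_pulse_us=2000.0):
    """Map a servo angle to a PWM pulse width in microseconds (assumed range)."""
    angle = min(max(angle_deg, min_angle), max_angle)
    fraction = (angle - min_angle) / (max_angle - min_angle)
    return min_pulse_us + fraction * (max_pulse_us - min_pulse_us)


def servo_trajectory(start_deg, end_deg, steps):
    """Pulse widths for a move from start_deg to end_deg in `steps` intervals."""
    return [
        angle_to_pulse_us(
            start_deg + (end_deg - start_deg) * symmetric_profile(i / steps))
        for i in range(steps + 1)
    ]
```

With such a profile the servo starts and ends each move at zero speed, so the reference never jumps. More steps per move lower the acceleration for the same travel, at the cost of a slower movement.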

The robot can be in play mode, in which it receives control commands from an external PC via its Bluetooth interface. In jogging mode, we can control the robot with the buttons. In self-test mode, the robot checks all of its peripherals and servo drives.

5.4. Conclusion

The goal of this chapter was to suggest mechanical and control methods for a social robot within the iSPACE concept. The control methods were implemented and tested in different situations. MogiRobi was controlled with simple commands from the fuzzy logic system and worked within the iSPACE concept with promising results. It is an easily built, well-designed structure that can be adapted to many application areas in social robotics. The robot can cooperate with both dogs and humans. In the test scenarios the emotions of the robot were always recognized. This demonstrates that the mechanical and control methods of MogiRobi are appropriate for human-robot and dog-robot interaction. The suggested mechanical structure worked successfully during emotion recognition and cognitive communication.